How to Delete Old Data From an Azure Storage Table : Part 1

/Have you ever tried to purge old data from an Azure table? For example, let's say you're using the table for logging, like the Azure Diagnostics Trace Listener which logs to the WADLogsTable, and you want to purge old log entries.

There is a suggestion on Azure feedback for this feature, questions on Stack Overflow (here's one and another), questions on MSDN Forums and bugs on Github.

There are numerous good articles that cover deletion of entities from Azure Tables.

However, no tools are available to help.

UPDATE: I’m creating a SaaS tool that will automatically delete data from your Azure tables older than X days so you don’t need to worry about building this yourself. Interested? Sign up for the beta (limited spots available):

TL;DR: while the building blocks exist in the Azure Table storage APIs to delete entities, there are no native ways to easily bulk delete data, and no easy way to purge data older than a certain number of days. Nuking the entire table is certainly the easiest way to go, so designing your system to roll to a new table every X days or month or whatever (for example Logs201907, Logs201908 for logs generated in July 2019 and August 2019 respectively) would be my recommendation.

If that ship has sailed and you stuck in a situation where you want to purge old data from a table, like I was, I created a tool to make my life, and hopefully your life as well, a bit easier.

The code is available here: https://github.com/brentonw/AzureTablePurger

Disclaimer: this is prototype code, I've run this successfully myself and put it through a bunch of functional tests, but at present, it doesn’t have a set of unit tests. Use at your own risk :)

How it Works

Fundamentally, this tool has 2 steps:

Enumerates entities to be deleted

Delete entities using batch operations on Azure Table Storage

I started off building a simple version which synchronously grabs a page of query results from the API, then breaks that page into batches of no more than 100 items, grouped by PartitionKey - a requirement for the Azure Table Storage Batch Operation API, then execute batch delete operations on Azure Table Storage.

Enumerating Which Data to Delete

Depending on your PartitionKey and RowKey structure, this might be relatively easy and efficient, or it might be painful. With Azure Table Storage, you can only query efficiently when using PartitionKey or a PartitionKey + RowKey combination. Anything else results in a full table scan which is inefficient and time consuming. There is tons of background on this in the Azure docs: Azure Storage Table Design Guide: Designing Scalable and Performant Tables.

Querying on the Timestamp column is not efficient, and will require a full table scan.

If we take a look at the WADLogsTable, we'll see data similar to this:

PartitionKey = 0636213283200000000RowKey = e046cc84a5d04f3b96532ebfef4ef918___Shindigg.Azure.BackgroundWorker___Shindigg.Azure.BackgroundWorker_IN_0___0000000001652031489

Here's how the format breaks down:

PartitionKey = "0" + the minute of the number of ticks since 12:00:00 midnight, January 1, 0001

RowKey = this is the deployment ID + the name of the role + the name of the role instance + a unique identifier

Every log entry within a particular minute is bucketed into the same partition which has 2 key advantages:

We can now effectively query on time

Each partition shouldn't have too many records in there, since there shouldn't be that many log entries within a single minute

Here's the query we construct to enumerate the data:

public TableQuery GetTableQuery(int purgeEntitiesOlderThanDays) { var maximumPartitionKeyToDelete = GetMaximumPartitionKeyToDelete(purgeEntitiesOlderThanDays); var query = new TableQuery() .Where(TableQuery.GenerateFilterCondition("PartitionKey", QueryComparisons.LessThanOrEqual, maximumPartitionKeyToDelete)) .Select(new[] { "PartitionKey", "RowKey" }); return query; } private string GetMaximumPartitionKeyToDelete(int purgeRecordsOlderThanDays) { return DateTime.UtcNow.AddDays(-1 * purgeRecordsOlderThanDays).Ticks.ToString("D19"); }

The execution of the query is pretty straightforward:

var page = TableReference.ExecuteQuerySegmented(query, continuationToken);

The Azure Table Storage API limits us to 1000 records at a time. If there are more than 1000 results, the continuationToken will be set to a non-null value, which will indicate we need to make the query again with that particular continuationToken to get the next page of data from the query.

Deleting the Data

In order to make this as efficient as possible and minimize the number of delete calls we make to the Auzre Table Storage API, we want to batch things up as much as possible.

Azure Table Storage supports batch operations, but there are two caveats we need to be aware of (1) all entities must be in the same partition and (2) there can be no more than 100 items in a batch.

To achieve this, we're going to break our page of data into chunks of no more than 100 entities, grouped by PartitionKey:

protected IList<IList<DynamicTableEntity>> GetPartitionsFromPage(IList<DynamicTableEntity> page) { var result = new List<IList<DynamicTableEntity>>(); var groupByResult = page.GroupBy(x => x.PartitionKey); foreach (var partition in groupByResult.ToList()) { var partitionAsList = partition.ToList(); if (partitionAsList.Count > MaxBatchSize) { var chunkedPartitions = Chunk(partition, MaxBatchSize); foreach (var smallerPartition in chunkedPartitions) { result.Add(smallerPartition.ToList()); } } else { result.Add(partitionAsList); } } return result; }

Then, we'll iterate over each partition, construct a batch operation and execute it against the table:

foreach (var entity in partition) { Trace.WriteLine( $"Adding entity to batch: PartitionKey=, RowKey="); entityCounter++; batchOperation.Delete(entity); batchCounter++; } Trace.WriteLine($"Added items into batch"); partitionCounter++; Trace.WriteLine($"Executing batch delete of entities"); TableReference.ExecuteBatch(batchOperation);

Here's the entire processing logic:

public override void PurgeEntities(out int numEntitiesProcessed, out int numPartitionsProcessed) { VerifyIsInitialized(); var query = PartitionKeyHandler.GetTableQuery(PurgeEntitiesOlderThanDays); var continuationToken = new TableContinuationToken(); int partitionCounter = 0; int entityCounter = 0; // Collect and process data do { var page = TableReference.ExecuteQuerySegmented(query, continuationToken); var firstResultTimestamp = PartitionKeyHandler.ConvertPartitionKeyToDateTime(page.Results.First().PartitionKey); WriteStartingToProcessPage(page, firstResultTimestamp); var partitions = GetPartitionsFromPage(page.ToList()); ConsoleHelper.WriteLineWithColor($"Broke into partitions", ConsoleColor.Gray); foreach (var partition in partitions) { Trace.WriteLine($"Processing partition "); var batchOperation = new TableBatchOperation(); int batchCounter = 0; foreach (var entity in partition) { Trace.WriteLine( $"Adding entity to batch: PartitionKey=, RowKey="); entityCounter++; batchOperation.Delete(entity); batchCounter++; } Trace.WriteLine($"Added items into batch"); partitionCounter++; Trace.WriteLine($"Executing batch delete of entities"); TableReference.ExecuteBatch(batchOperation); WriteProgressItemProcessed(); } continuationToken = page.ContinuationToken; } while (continuationToken != null); numPartitionsProcessed = partitionCounter; numEntitiesProcessed = entityCounter; }

Performance

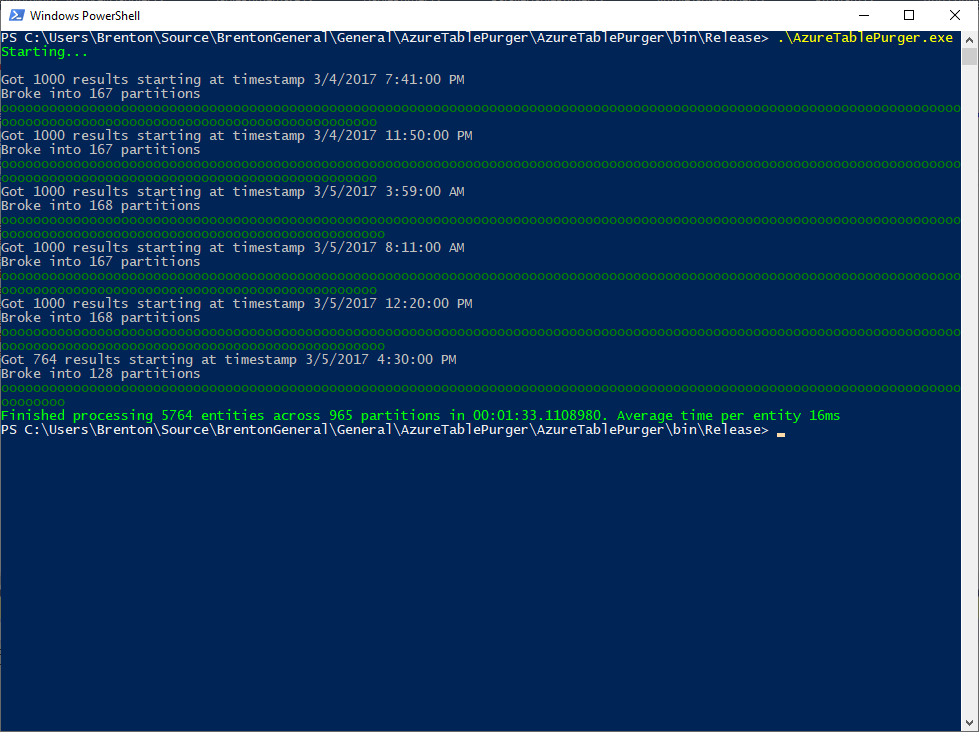

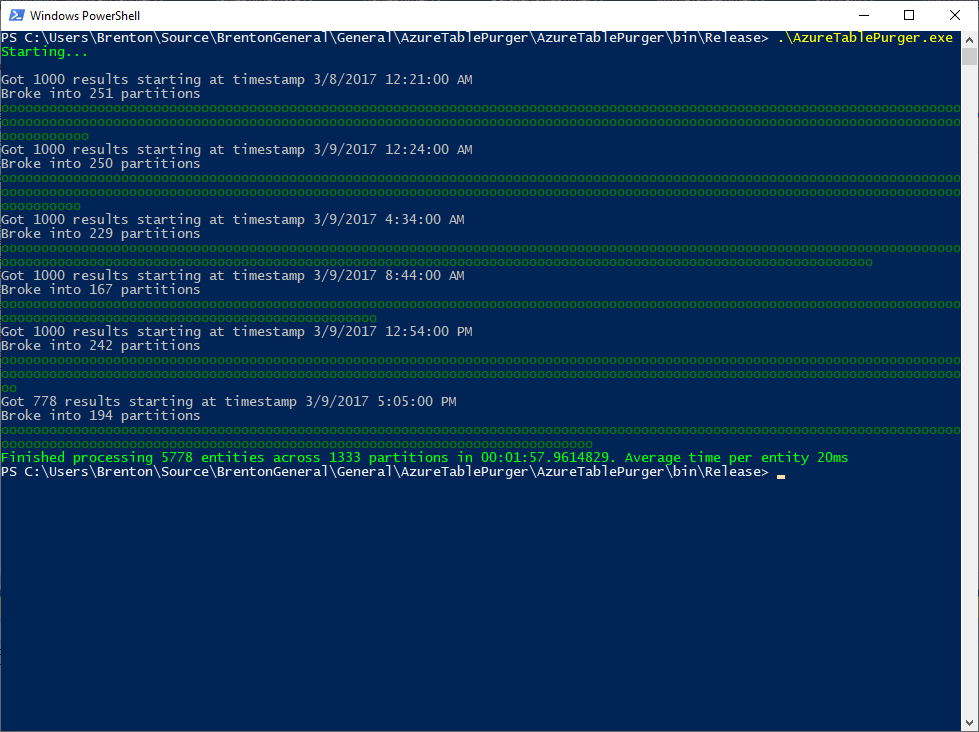

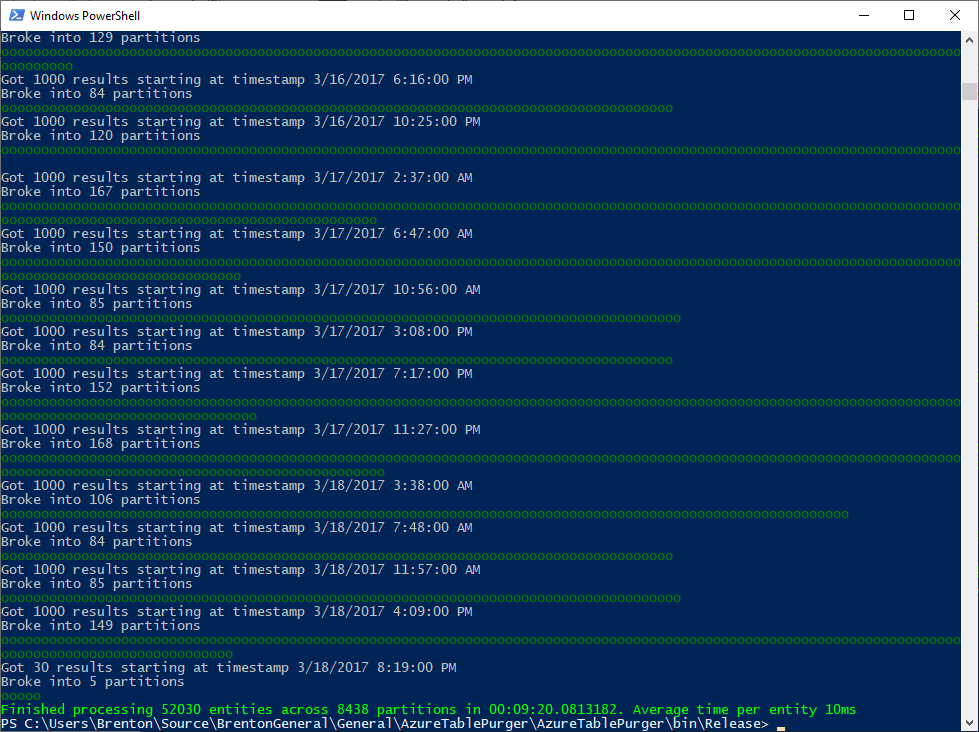

I ran this a few times on a subset of data. On average it takes around 10-20ms depending on the execution run to delete each entity:

This seemed kind of slow to me, and when trying to purge a lot of data, it takes many hours to run. I figured I should be able to improve the speed dramatically by turning this into a parallel operation.

Stay tuned for part 2 of this series to dive deeper into the parallel implementation.

Conclusion for Part 1

Since Azure Table Storage has no native way to purge old data, your best best is to structure your data so that you can simply delete old tables when you no longer need them and purge data that way.

However, if you can’t do that, feel free to make use of this AzureTablePurger tool.